When building or upgrading a PC, most people focus on GPUs, CPUs, and SSDs — but few realize that PCI Express (PCIe) lanes are the invisible highways that connect these parts together. They determine how fast your GPU communicates with your CPU, how many NVMe SSDs you can use without bottlenecks, and whether your next upgrade will actually perform as expected.

This article breaks down what PCIe lanes are, how they work, what’s new in PCIe Gen4 and Gen5, and how to make smarter choices when planning your system.

What Is a PCIe Lane?

A PCIe lane is the fundamental communication channel of the PCI Express interface.

Each lane is made up of two pairs of wires — one pair for transmitting data and another for receiving — which makes PCIe full-duplex (data can flow in both directions at once).

Multiple lanes can be grouped to create wider connections, which you often see labeled as ×1, ×4, ×8, or ×16.

×1: Suitable for small add-in cards like Wi-Fi or capture cards.

×4: Used by NVMe SSDs and M.2 slots.

×16: Dedicated to GPUs for maximum bandwidth.

In short, more lanes = more bandwidth.

For a more detailed explanation of what a PCIe lane is, see our previous article on the topic.

CPU-Supported Lanes vs Chipset Lanes

Not all PCIe lanes are created equal.

Modern systems have two sources of PCIe lanes:

CPU Lanes: Directly connected to the processor. These offer the lowest latency and highest bandwidth.

Chipset (PCH) Lanes: Routed through the motherboard’s chipset, which connects back to the CPU over a single uplink (called DMI on Intel; a PCIe ×4 link on AMD’s consumer platforms).

Because all chipset lanes share that single uplink, they can become a bottleneck when multiple high-speed devices, such as NVMe SSDs or capture cards, compete for bandwidth.

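For example, a mainstream AMD Ryzen or Intel Core CPU typically provides roughly 20–28 lanes in total (the exact count varies by platform and generation), usually allocated as:

16 lanes for the GPU (×16 slot)

4 lanes for the main NVMe SSD

The remainder for the chipset link and, on some platforms, an extra CPU-attached M.2 slot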

Everything else — USB controllers, SATA ports, Wi-Fi modules — connects through the chipset’s slower lanes.
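To make the shared-uplink bottleneck concrete, here is a minimal Python sketch that adds up the peak demand of devices behind the chipset and compares it with the uplink's capacity. The device list, the per-lane figures (approximate usable GB/s after encoding overhead), and the Gen4 ×4 uplink are illustrative assumptions, not measurements from any specific board.

```python
# Approximate usable bandwidth per lane in GB/s (one direction),
# after line-encoding overhead. Rounded, illustrative values.
GBPS_PER_LANE = {3: 0.985, 4: 1.969, 5: 3.938}


def link_bandwidth(gen, lanes):
    """Approximate one-direction bandwidth of a PCIe link in GB/s."""
    return GBPS_PER_LANE[gen] * lanes


def uplink_report(uplink, devices):
    """Compare total peak device demand with the chipset uplink.

    uplink  -- (generation, lane count) of the chipset-to-CPU link
    devices -- mapping of device name to peak bandwidth in GB/s
    Returns (capacity, demand, oversubscribed).
    """
    capacity = link_bandwidth(*uplink)
    demand = sum(devices.values())
    return capacity, demand, demand > capacity


# Hypothetical set of devices hanging off the chipset.
devices = {
    "NVMe SSD (Gen4 x4)": link_bandwidth(4, 4),
    "NVMe SSD (Gen3 x4)": link_bandwidth(3, 4),
    "10 GbE NIC": 1.25,
    "USB 10 Gb/s capture device": 1.25,
}

# Assumed uplink: equivalent to PCIe Gen4 x4.
capacity, demand, oversubscribed = uplink_report((4, 4), devices)
print(f"Uplink capacity: {capacity:.1f} GB/s")
print(f"Peak demand:     {demand:.1f} GB/s")
print("Oversubscribed" if oversubscribed else "Within budget")
```

Oversubscription only hurts when the devices are actually busy at the same time, so treat the result as a worst case rather than a prediction.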

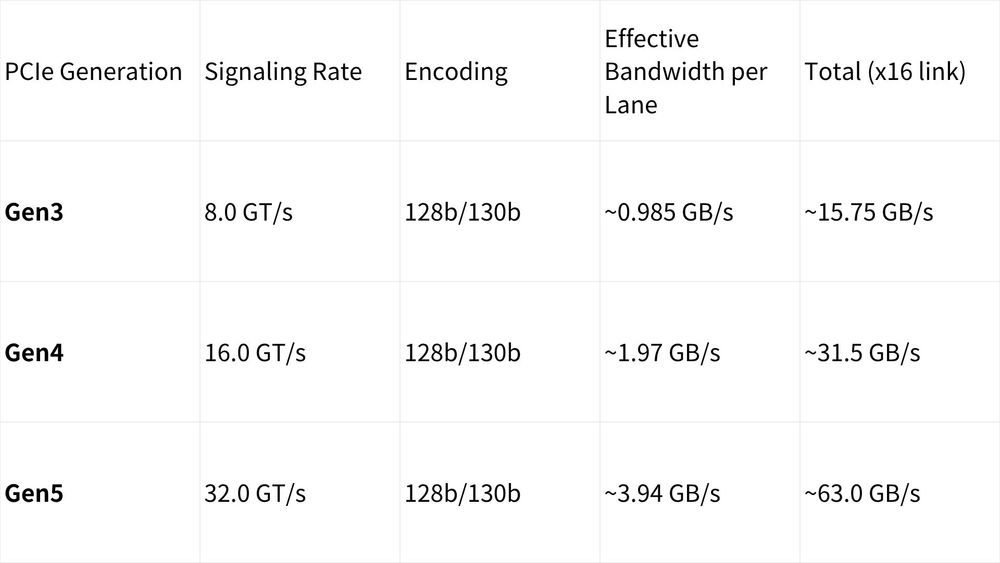

PCIe Generations and Their Speeds

Each new PCIe generation roughly doubles the bandwidth per lane compared to the previous one.

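Let’s look at the approximate numbers, per direction:

(GT/s = gigatransfers per second)

Gen3: 8 GT/s per lane, about 0.98 GB/s per lane, about 15.8 GB/s at ×16

Gen4: 16 GT/s per lane, about 1.97 GB/s per lane, about 31.5 GB/s at ×16

Gen5: 32 GT/s per lane, about 3.94 GB/s per lane, about 63 GB/s at ×16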

In simple terms:

A PCIe Gen4 ×8 slot is about as fast as a Gen3 ×16 slot.

A Gen5 ×8 slot matches Gen4 ×16 speeds, meaning fewer lanes can deliver the same throughput.

This is why the latest GPUs and SSDs can achieve incredible performance even with fewer allocated lanes.
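These comparisons follow from simple arithmetic: transfer rate, times encoding efficiency, times lane count. The sketch below computes approximate one-direction bandwidth for Gen1 through Gen5 and shows how Gen4 ×8 lines up with Gen3 ×16, and Gen5 ×8 with Gen4 ×16. It accounts only for line encoding (8b/10b for Gen1 and Gen2, 128b/130b for Gen3 through Gen5); packet and protocol overhead are ignored, so real-world throughput will be somewhat lower.

```python
# Per generation: (transfer rate in GT/s, payload bits, total bits on the wire).
PCIE_GENERATIONS = {
    1: (2.5, 8, 10),      # 8b/10b encoding
    2: (5.0, 8, 10),      # 8b/10b encoding
    3: (8.0, 128, 130),   # 128b/130b encoding
    4: (16.0, 128, 130),
    5: (32.0, 128, 130),
}


def lane_bandwidth_gbps(gen):
    """Approximate usable bandwidth of one lane, one direction, in GB/s."""
    rate, payload_bits, total_bits = PCIE_GENERATIONS[gen]
    return rate * payload_bits / total_bits / 8  # divide by 8: bits -> bytes


def link_bandwidth_gbps(gen, lanes):
    """Approximate usable bandwidth of a link with the given lane count."""
    return lane_bandwidth_gbps(gen) * lanes


for gen in sorted(PCIE_GENERATIONS):
    x1 = link_bandwidth_gbps(gen, 1)
    x16 = link_bandwidth_gbps(gen, 16)
    print(f"Gen{gen}: x1 = {x1:.2f} GB/s, x16 = {x16:.1f} GB/s")

print(f"Gen4 x8  = {link_bandwidth_gbps(4, 8):.2f} GB/s")
print(f"Gen3 x16 = {link_bandwidth_gbps(3, 16):.2f} GB/s")
print(f"Gen5 x8  = {link_bandwidth_gbps(5, 8):.2f} GB/s")
print(f"Gen4 x16 = {link_bandwidth_gbps(4, 16):.2f} GB/s")
```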

PCIe Gen4 vs Gen5: The Engineering Leap

PCIe Gen5 isn’t just “twice as fast.” It’s also much harder to design for.

At 32 GT/s, every bit of signal loss matters. Motherboard manufacturers must use:

High-grade PCB materials (low-loss laminates)

Shorter trace lengths

Redrivers or retimers to maintain signal integrity

Precise impedance control during routing

That’s why Gen5 motherboards are more expensive — they need advanced engineering to handle those speeds without data errors.
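To give a feel for why the margins get so tight, this short sketch computes the time available for each bit (the unit interval) and the fundamental (Nyquist) frequency of the signal at each data rate. It assumes NRZ signaling, which carries one bit per transfer and is what Gen1 through Gen5 use; the function names are illustrative.

```python
def unit_interval_ps(gt_per_s):
    """Duration of a single bit on the wire, in picoseconds."""
    return 1e12 / (gt_per_s * 1e9)


def nyquist_ghz(gt_per_s):
    """Fundamental frequency of an NRZ signal: half the transfer rate."""
    return gt_per_s / 2


for gen, rate in ((3, 8.0), (4, 16.0), (5, 32.0)):
    print(
        f"Gen{gen}: {rate:g} GT/s -> "
        f"{unit_interval_ps(rate):.2f} ps per bit, "
        f"{nyquist_ghz(rate):g} GHz Nyquist"
    )
```

At 32 GT/s each bit lasts about 31 ps, half as long as at Gen4, and the signal's fundamental frequency doubles to 16 GHz, where PCB traces lose considerably more energy.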

For PC builders, the takeaway is this:

You won’t “feel” Gen5 speed in everyday tasks unless you’re pushing ultra-high-bandwidth workloads (e.g., 3D rendering, AI training, multi-NVMe RAID). But you will benefit from future-proofing.

Real-World Example: Lane Allocation in Modern CPUs

Let’s visualize a typical setup using a modern Intel or AMD CPU:

[CPU]
 │
 ├── PCIe x16 → GPU
 ├── PCIe x4 → NVMe SSD (M.2 slot)
 └── PCIe x4 → Chipset link (DMI / PCIe uplink)
      │
      ├── USB ports
      ├── SATA drives
      ├── Secondary NVMe slots
      └── Network / Wi-Fi cards

When you install a second NVMe drive, your motherboard might share or split lanes — sometimes reducing your GPU from ×16 to ×8. While this doesn’t usually affect gaming performance much (Gen4 ×8 = Gen3 ×16), it’s important to check your motherboard manual to see which slots share bandwidth.
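The drop from ×16 to ×8 (rather than to ×12) comes from how the CPU's 16 GPU lanes can be split. Here is a rough sketch, assuming the three bifurcation modes commonly offered on consumer platforms (×16, ×8/×8, ×8/×4/×4). The mode table and the `pick_mode` helper are hypothetical; your board's manual is the authority on which splits it actually supports.

```python
# Assumed bifurcation modes for the CPU's 16 "GPU" lanes.
# The first segment of each mode feeds the primary (GPU) slot.
BIFURCATION_MODES = {
    "x16": (16,),
    "x8/x8": (8, 8),
    "x8/x4/x4": (8, 4, 4),
}


def pick_mode(extra_devices):
    """Pick the first mode that fits the extra devices.

    extra_devices -- lane widths wanted by devices that share the
                     GPU's lanes (e.g. [4] for one extra NVMe drive)
    Returns (mode name, lanes left for the GPU).
    """
    wanted = sorted(extra_devices, reverse=True)
    for name, segments in BIFURCATION_MODES.items():
        gpu_lanes, *rest = segments
        available = sorted(rest, reverse=True)
        if len(available) >= len(wanted) and all(
            have >= want for have, want in zip(available, wanted)
        ):
            return name, gpu_lanes
    raise ValueError(f"no bifurcation mode fits {extra_devices}")


for extras in ([], [4], [4, 4]):
    mode, gpu_lanes = pick_mode(extras)
    print(f"extra devices {extras}: mode {mode}, GPU runs at x{gpu_lanes}")
```

Even a single ×4 drive on the shared lanes pushes the GPU down to ×8, because the split happens in fixed segments rather than lane by lane.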

Why PCIe Lanes Matter

Even with ultra-fast components, bandwidth bottlenecks can occur if you overload the chipset or split CPU lanes inefficiently.

Here’s how lane management affects performance:

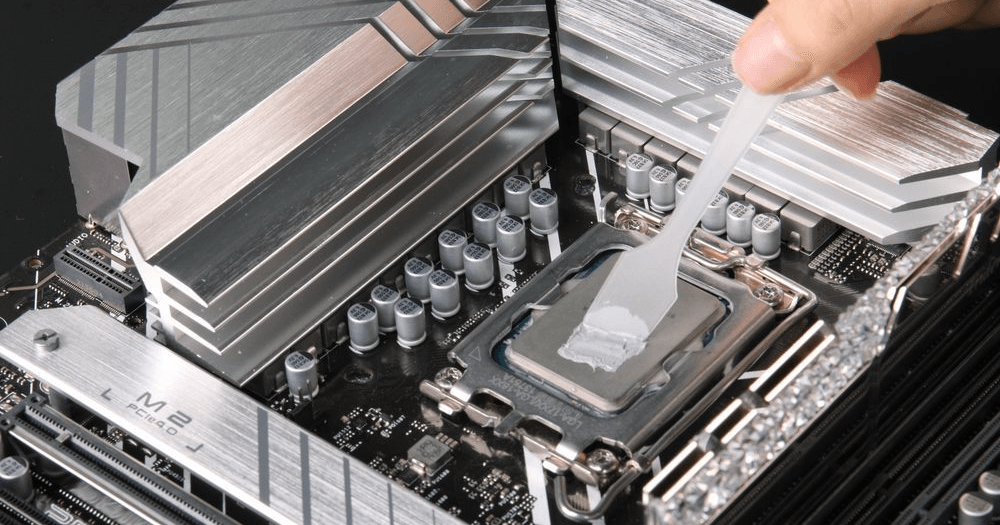

GPU bandwidth: Critical for rendering and compute tasks.

Storage bandwidth: Impacts NVMe SSD transfer rates, especially in RAID.

Add-in cards: Capture cards, NICs, and accelerators can easily saturate chipset lanes.

Efficient use of CPU lanes ensures each device has a clear, fast path to the processor.

That’s why high-end platforms like AMD Threadripper or Intel Xeon offer 48–128 lanes — perfect for multi-GPU or workstation setups.

Future Outlook: PCIe Gen6 and Beyond

The next step, PCIe Gen6, doubles speeds again to 64 GT/s per lane using PAM4 (four-level Pulse Amplitude Modulation) signaling, which carries two bits per symbol instead of one. That is a major shift in how signals are transmitted.

However, this brings even greater design challenges:

Higher power consumption

More expensive PCB materials

Increased need for error correction (forward error correction, paired with fixed-size FLIT encoding)

While it may take time before Gen6 reaches consumer PCs, enterprise servers and data centers will adopt it first — paving the way for the next generation of GPUs and SSDs.
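As a rough illustration of what PAM4 changes, the sketch below maps pairs of bits onto four signal levels, so the same number of symbols carries twice as many bits as two-level (NRZ) signaling. The Gray-coded level assignment is an illustrative choice for this example, not a statement of the exact mapping in the specification.

```python
# Illustrative Gray-coded mapping: adjacent levels differ by one bit,
# so mistaking a level for its neighbour corrupts only a single bit.
GRAY_PAM4 = {(0, 0): 0, (0, 1): 1, (1, 1): 2, (1, 0): 3}


def pam4_encode(bits):
    """Encode a bit sequence as PAM4 symbols (two bits per symbol)."""
    if len(bits) % 2:
        raise ValueError("PAM4 needs an even number of bits")
    return [GRAY_PAM4[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


def nrz_encode(bits):
    """Encode a bit sequence as NRZ symbols (one bit per symbol)."""
    return list(bits)


bits = [0, 0, 0, 1, 1, 1, 1, 0]
print("NRZ symbols: ", nrz_encode(bits))
print("PAM4 symbols:", pam4_encode(bits))

# 64 GT/s at 2 bits per symbol means the symbol rate stays at 32 GBaud.
print("Gen6 symbol rate:", 64 / 2, "GBaud")
```

The symbol rate stays where Gen5 left it, but the receiver now has to tell four voltage levels apart instead of two, which is why noise margins shrink and error correction becomes necessary.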

Practical Tips for PC Builders

Check lane sharing: Before buying your motherboard, verify which M.2 slots or PCIe slots share lanes with your GPU.

Use CPU lanes for critical devices: Your GPU and main NVMe drive should always occupy CPU-direct lanes.

Don’t obsess over Gen5 (yet): Unless your workload is heavily I/O-bound, Gen4 offers plenty of bandwidth.

Future-proof smartly: PCIe is backward compatible, so a Gen5 motherboard also runs Gen4 and Gen3 devices. Choosing one keeps your upgrade path open for years to come.

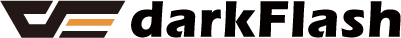

Balance cooling and bandwidth: High-speed PCIe devices generate extra heat; good airflow and heatsinks are essential.
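To verify what your devices actually negotiated, Linux exposes each PCIe device's link speed and width through sysfs. The following sketch reads the `current_link_speed`, `max_link_speed`, `current_link_width`, and `max_link_width` attributes under `/sys/bus/pci/devices` and flags links running below their maximum. It assumes a Linux system; the helper names are illustrative, and devices that lack these attributes are simply skipped.

```python
from pathlib import Path

LINK_ATTRS = (
    "current_link_speed",
    "max_link_speed",
    "current_link_width",
    "max_link_width",
)


def read_link_status(device_dir):
    """Read PCIe link attributes from one sysfs device directory.

    Missing or unreadable attributes are returned as None.
    """
    status = {}
    for attr in LINK_ATTRS:
        try:
            status[attr] = (Path(device_dir) / attr).read_text().strip()
        except OSError:
            status[attr] = None
    return status


def is_downgraded(status):
    """True if the link runs narrower or at a different speed than its maximum."""
    if any(status[attr] is None for attr in LINK_ATTRS):
        return False
    narrower = int(status["current_link_width"]) < int(status["max_link_width"])
    other_speed = status["current_link_speed"] != status["max_link_speed"]
    return narrower or other_speed


def main(root="/sys/bus/pci/devices"):
    root = Path(root)
    if not root.is_dir():
        print(f"{root} not found (this check needs Linux sysfs)")
        return
    for device in sorted(root.iterdir()):
        status = read_link_status(device)
        if status["current_link_width"] is None:
            continue  # device does not report PCIe link attributes
        flag = "  <-- below maximum" if is_downgraded(status) else ""
        print(
            f"{device.name}: x{status['current_link_width']} "
            f"@ {status['current_link_speed']} "
            f"(max x{status['max_link_width']} @ {status['max_link_speed']}){flag}"
        )


if __name__ == "__main__":
    main()
```

A link below its maximum is not always a problem: many GPUs lower their link speed at idle to save power, so check again under load before blaming the slot.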

Conclusion

PCIe lanes are the circulatory system of your PC — unseen, but vital. Understanding how many lanes your CPU offers, and how they’re allocated, can make the difference between a balanced build and one full of hidden bottlenecks.

As PCIe Gen5 becomes mainstream and Gen6 looms on the horizon, knowing how these lanes work will help you make smarter hardware decisions — whether you’re building a gaming powerhouse, a creative workstation, or a high-speed storage server.